
Solutions for Machine Learning Systems

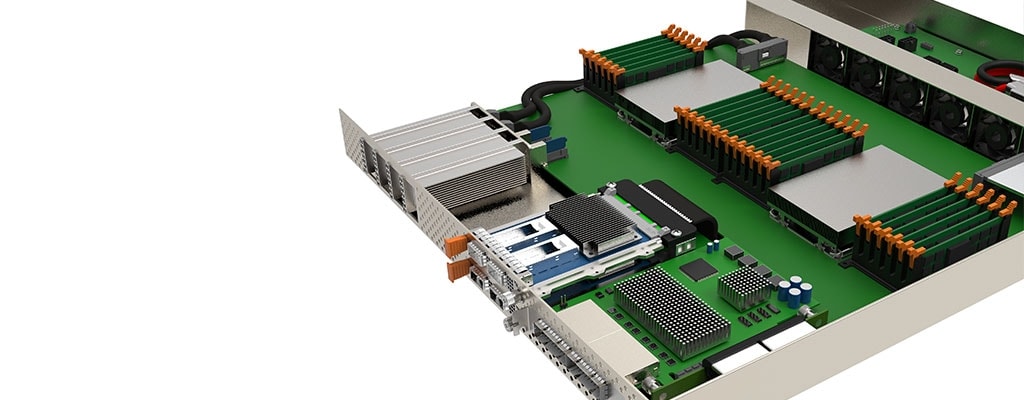

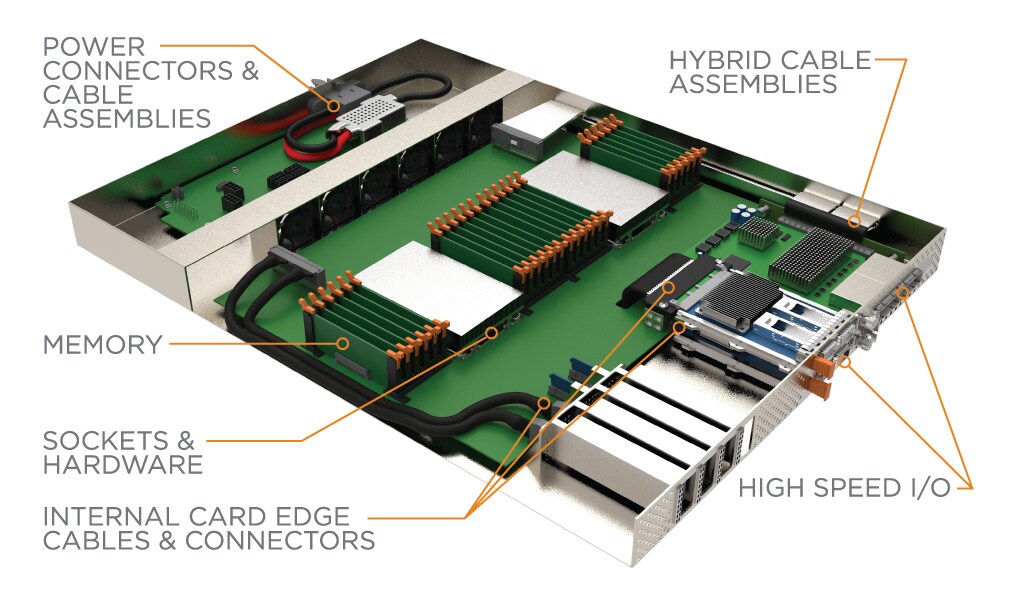

High-speed and power connectivity solutions for machine learning and inference systems.

Our world is more connected than ever, with billions of IoT devices at the edge collectively generating a tremendous amount of data. Insights from this data change the way we do business, interact with people, and live our daily lives. These insights are generated by processing the data through neural networks that recognize patterns and categorize the information. Once a neural network is trained, the model can be deployed to process and analyze new data, a step commonly known as inference. Speech recognition, object detection, image classification, and content personalization and recommendation engines are everyday applications that take advantage of inference.
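
To make the split between training and inference concrete, here is a minimal Python sketch. For brevity it assumes a one-feature logistic regression as a stand-in for a neural network, and the function names `train` and `infer` are hypothetical. `train` fits the model parameters once on labeled data; `infer` then applies the frozen parameters to new, unseen inputs, which is the workload that gets pushed to mobile and edge devices.

```python
import math


def sigmoid(z: float) -> float:
    """Logistic function mapping a score to a probability in (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def train(samples, labels, lr=0.5, epochs=500):
    """Training: fit weight w and bias b by batch gradient descent on log loss."""
    w, b = 0.0, 0.0
    n = len(samples)
    for _ in range(epochs):
        grad_w = grad_b = 0.0
        for x, y in zip(samples, labels):
            err = sigmoid(w * x + b) - y  # prediction error for one sample
            grad_w += err * x
            grad_b += err
        w -= lr * grad_w / n
        b -= lr * grad_b / n
    return w, b  # the "trained model" is just these parameters


def infer(model, x):
    """Inference: classify a new input with the already-trained parameters."""
    w, b = model
    return 1 if sigmoid(w * x + b) >= 0.5 else 0


if __name__ == "__main__":
    # Toy labeled data: negative inputs are class 0, positive inputs class 1.
    model = train([-3.0, -2.0, -1.0, 1.0, 2.0, 3.0], [0, 0, 0, 1, 1, 1])
    print(infer(model, 2.5), infer(model, -2.5))
```

Training is the expensive, iterative loop; inference is a single cheap evaluation per input, which is why the two phases are often mapped to different hardware.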

End users demand real-time insights in these applications, which pushes inference onto mobile devices and out to the edge. Hardware accelerators such as FPGAs, GPUs, and ASICs are used to classify and characterize the data. These devices differ in processing power and power consumption, and each has advantages depending on the workload. Some are used for both training and inference, while others are dedicated to one or the other.

- Field-Programmable Gate Arrays (FPGAs) are commonly used to accelerate networking and storage processing and offload these tasks from the CPU.

- Graphics Processing Units (GPUs) are designed to handle concurrent tasks and can process large data sets more efficiently than CPUs.

- Application-Specific Integrated Circuits (ASICs) are processors designed around specific workloads or tasks, allowing for optimal power efficiency.

Hardware accelerators are often connected to other compute and storage devices, clustered together in large fabrics, and connected to the larger network. Network connections can use protocols such as Ethernet and InfiniBand, which typically rely on high-speed I/O form factors such as OSFP and QSFP-DD. PCIe is used to connect storage devices, network interface cards (NICs), and hardware accelerators to the CPU. TE’s Sliver connector and cable family is compliant with the SFF-TA-1002 specification and allows these devices to connect and operate at PCIe Gen 5 and Gen 6 speeds.
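
To put PCIe Gen 5 and Gen 6 speeds in perspective, the per-direction bandwidth of a link is roughly the per-lane transfer rate times the lane count times the encoding efficiency, divided by 8 bits per byte. The sketch below uses the base rates of 32 GT/s (Gen 5) and 64 GT/s (Gen 6); the function name is hypothetical, and it deliberately simplifies Gen 6 by ignoring FLIT and FEC overhead unless an efficiency factor is passed in.

```python
# Per-lane transfer rates (GT/s) for the two PCIe generations named above.
PCIE_RATES_GT_S = {5: 32.0, 6: 64.0}


def pcie_bandwidth_gb_s(gen: int, lanes: int, encoding_efficiency: float = 1.0) -> float:
    """Approximate one-direction link bandwidth in GB/s.

    rate (Gb/s per lane) * lanes * efficiency / 8 bits per byte.
    Protocol overhead beyond line encoding (headers, flow control) is ignored.
    """
    return PCIE_RATES_GT_S[gen] * lanes * encoding_efficiency / 8


# Gen 5 uses 128b/130b line encoding; Gen 6 shown as the raw rate only.
gen5_x16 = pcie_bandwidth_gb_s(5, 16, 128 / 130)
gen6_x16_raw = pcie_bandwidth_gb_s(6, 16)
print(f"Gen 5 x16: {gen5_x16:.1f} GB/s per direction")
print(f"Gen 6 x16 (raw): {gen6_x16_raw:.1f} GB/s per direction")
```

Assuming an x16 accelerator slot, this works out to about 63 GB/s per direction for Gen 5 and 128 GB/s raw per direction for Gen 6, so each generation roughly doubles what the connector and cable channel must carry.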

Compute Express Link (CXL) and Gen-Z are emerging, rapidly adopted protocols that aim to remove the memory bottleneck by enabling cache-coherent memory. They are driving new architectures that require external PCIe fabrics, which use connectivity products such as CDFP and Mini-SAS HD.

TE’s STRADA Whisper high-speed backplane connector and cable system can enable system modularity by offering a blind-mate connection at 112 Gbps PAM-4 speeds.
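
The 112 Gbps PAM-4 figure can be unpacked with simple arithmetic. PAM-4 uses four amplitude levels, so each symbol carries 2 bits and a 112 Gbps lane signals at 56 GBaud; the Nyquist frequency the channel must support is half the symbol rate, 28 GHz. The sketch below assumes the nominal 112 Gbps rate, ignores coding overhead, and uses hypothetical function names.

```python
def symbol_rate_gbaud(bit_rate_gbps: float, levels: int) -> float:
    """Symbol rate for a multi-level signal: bit rate / bits per symbol."""
    if levels < 2 or levels & (levels - 1):
        raise ValueError("levels must be a power of two >= 2")
    bits_per_symbol = levels.bit_length() - 1  # 2 levels -> 1 bit, 4 levels -> 2 bits
    return bit_rate_gbps / bits_per_symbol


def nyquist_ghz(symbol_rate: float) -> float:
    """Nyquist frequency is half the symbol rate."""
    return symbol_rate / 2


pam4 = symbol_rate_gbaud(112, 4)  # PAM-4: 4 levels
nrz = symbol_rate_gbaud(112, 2)   # NRZ: 2 levels, for comparison
print(f"PAM-4: {pam4} GBaud, Nyquist {nyquist_ghz(pam4)} GHz")
print(f"NRZ:   {nrz} GBaud, Nyquist {nyquist_ghz(nrz)} GHz")
```

Compared with NRZ at the same bit rate, PAM-4 halves the symbol rate and therefore the Nyquist frequency, at the cost of smaller eye openings between levels, which places tighter demands on connector signal integrity.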
